ESR14 - SPARS2019

SPARS2019

What is SPARS?

This summer I attended the SPARS2019 conference. The Signal Processing with Adaptive Sparse Structured Representations (SPARS) workshop brings together researchers from statistics, engineering, mathematics, and computer science who work on sparsity-related techniques and computational methods for high-dimensional data analysis, signal processing, and related applications. Given this wide range of backgrounds, the conference topics are diverse:

- Compressive sensing & learning

- Representation learning (sparse coding, dictionary & deep learning)

- Low-rank approximation, matrix & tensor decomposition

- Phase retrieval, inverse problems with sparsity

- Sparsity in approximation theory, information theory or statistics

- Bayesian sparse modeling & inference

- Optimization theory & algorithms for sparsity

- Sparse graph & network analysis

- Low-complexity/low-dimensional models

Why I was there

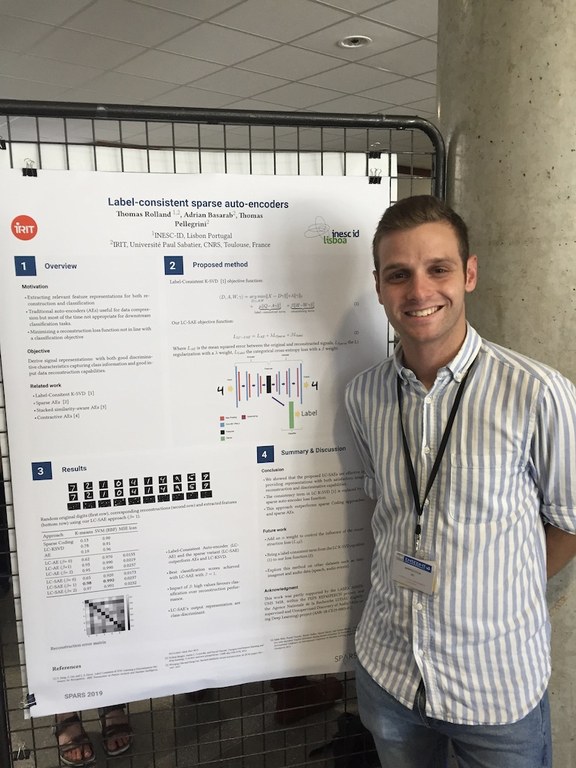

At this conference, I presented a poster on my master's internship work: the Label-Consistent AutoEncoder. AutoEncoders are useful for data compression, but the representations they produce are often not directly suitable for a downstream classification task, because they are trained to minimize a reconstruction error rather than a classification loss. With the Label-Consistent AutoEncoder, I proposed a novel supervised variant that integrates class information into the encoded representations directly through the loss function.
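To make the idea of "class information in the loss function" concrete, here is a minimal NumPy sketch of one common way to build a supervised autoencoder objective: a reconstruction error plus a weighted classification loss computed on the encoded representations. It is an illustration under assumptions, not necessarily the formulation used in the poster. The function name `supervised_ae_loss`, the tanh encoder, the linear decoder, the linear softmax classifier `W_cls`, and the weight `lam` are all hypothetical choices. The code only evaluates the objective; actual training would need gradients, typically from an autodiff framework.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over the class axis
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def supervised_ae_loss(X, y, W_enc, W_dec, W_cls, lam=1.0):
    """Hypothetical supervised autoencoder objective (illustrative sketch).

    X: (n, d) inputs, y: (n,) integer class labels.
    W_enc: (d, k) encoder, W_dec: (k, d) decoder, W_cls: (k, c) linear classifier.
    lam: assumed weight balancing reconstruction against the label term.
    """
    H = np.tanh(X @ W_enc)               # encoded representations
    X_hat = H @ W_dec                    # reconstruction from the codes
    recon = np.mean((X - X_hat) ** 2)    # mean squared reconstruction error
    P = softmax(H @ W_cls)               # class probabilities predicted from the codes
    n = X.shape[0]
    ce = -np.mean(np.log(P[np.arange(n), y] + 1e-12))  # cross-entropy label term
    return recon + lam * ce, recon, ce

# Usage on random toy data (no real dataset involved)
rng = np.random.default_rng(0)
X = rng.normal(size=(8, 5))
y = rng.integers(0, 3, size=8)
W_enc = rng.normal(scale=0.1, size=(5, 4))
W_dec = rng.normal(scale=0.1, size=(4, 5))
W_cls = rng.normal(scale=0.1, size=(4, 3))
total, recon, ce = supervised_ae_loss(X, y, W_enc, W_dec, W_cls, lam=0.5)
print(f"total={total:.3f} recon={recon:.3f} ce={ce:.3f}")
```

Setting `lam=0` recovers a plain, unsupervised autoencoder objective; increasing it pushes the codes to become more discriminative at the cost of some reconstruction quality.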

This conference gave me new insights into inverse problems, GANs, sparse representations, and non-convex estimation. Some posters and talks also presented mathematical proofs that could be worth taking into account in machine learning applications.

Toulouse

This year SPARS was held in Toulouse. Besides being the city where I grew up, it is a beautiful place with a great balance between history and innovation. If you are visiting France, I highly recommend stopping by. And seriously, look where the gala dinner was!